SQLite: Vacuuming the WALs

TL;DR

- WAL files can grow to the size of the largest transaction.

- The pragma

wal_checkpoint(truncate)can be used to shrink the WAL file. - A

vacuumcommand will result in the WAL file growing by roughly the number of used pages in the database. During the process, a temporary copy of the database will be be created. - An

incremental_vacuum(0)may result in the WAL file growing by approximately the number of free (‽) pages in the database. - A

vacuumorincremental_vacuumwill not shrink the database until a checkpoint is run. This may be either an automatic checkpoint or a manualwal_checkpoint.

Introduction

SQLite is a serverless SQL database engine which can be embedded within a program. This makes it a popular choice for a variety of use cases including as an application file format.

SQLite advertises that the Write-Ahead Logging (WAL) feature offers better performance than SQLite’s default rollback journal in most scenarios.

Using a database in WAL mode can result in interesting and sometimes surprising disk usage. While likely not impactful on a modern desktop or server, this may be of interest if running on an embedded device.

In an attempt to better understand this behaviour I’ve profiled how the disk usage of the various SQLite files change in a variety of scenarios. Note that performance has intentionally been ignored here, and I don’t doubt that a lot of SQLite’s design decisions have been geared towards improved throughput.

Test Setup

Python’s sqlite3 module was used to script various interactions with an SQLite database. The code and the generated results are available here at the tag v1.0. Fair warning - it’s not the most elegant code. The version of SQLite being used was 3.31.1.

The test script creates a database with the highly original name “test.db” and proceeds to monitor the size of the interesting database files; namely test.db, test.db-wal, test.db-shm, and the temporary directory. The temporary directory was specified to be a known location on disk using SQLITE_TMPDIR. The -shm file was omitted from the graphs below as its behaviour was deemed uninteresting.

The database schema was kept simple, consisting of a single table “Data”:

CREATE TABLE Data (

PrimaryKey INTEGER PRIMARY KEY,

Stuff TEXT

);

Write Ahead Logging mode was of course enabled on the database.

Various “scenarios” of interest were scripted. Each scenario typically starts by populating the database with 100 rows with each added row containing 1 MB of text. Each profiled scenario has been given a numeric identifier. In the titles of the graphs below, the shortened form “S.XX” is used to refer to “Scenario XX”.

Large Transactions with a WAL File

By default, SQLite will try to let the WAL file grow to 1000 pages in size before moving transactions from the WAL file to the original database. This process is known as an automatic checkpoint. Considering the default page size is 4096 B, it shouldn’t be unusual to see the WAL file size of a busy database sitting around 4.1 MB. The SQLite documentation lists cases where the WAL file can grow well beyond 4 MB.

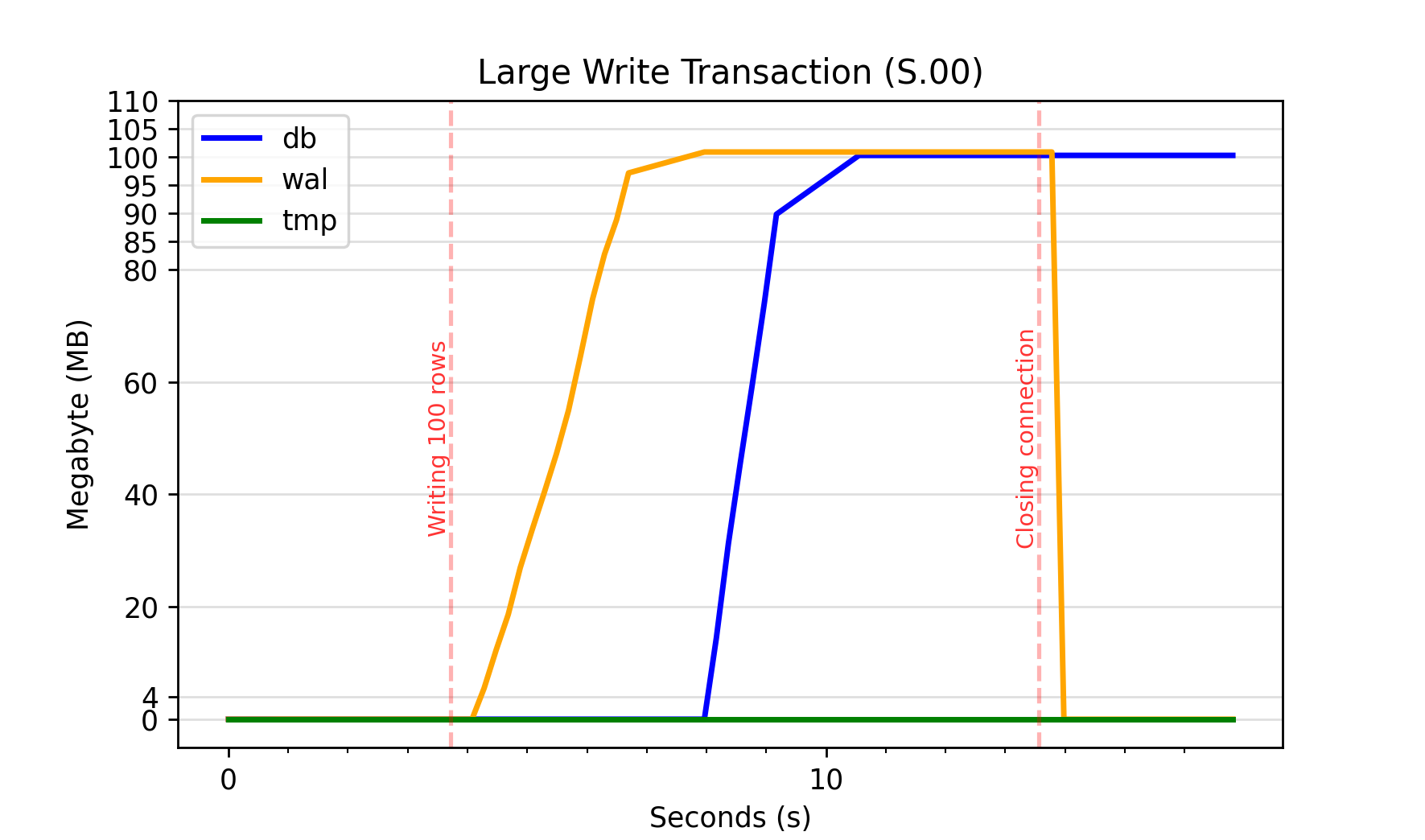

One such way for the number of pages in a WAL file to increase beyond the automatic checkpoint size is by executing a large transaction. The graph S.00 below shows the result writing 100 rows of 1 MB to the database before committing. The WAL file can be seen to grow to 100 MB around the 8 s mark before the database starts increasing to 100 MB after SQLite’s automatic checkpoint occurs.

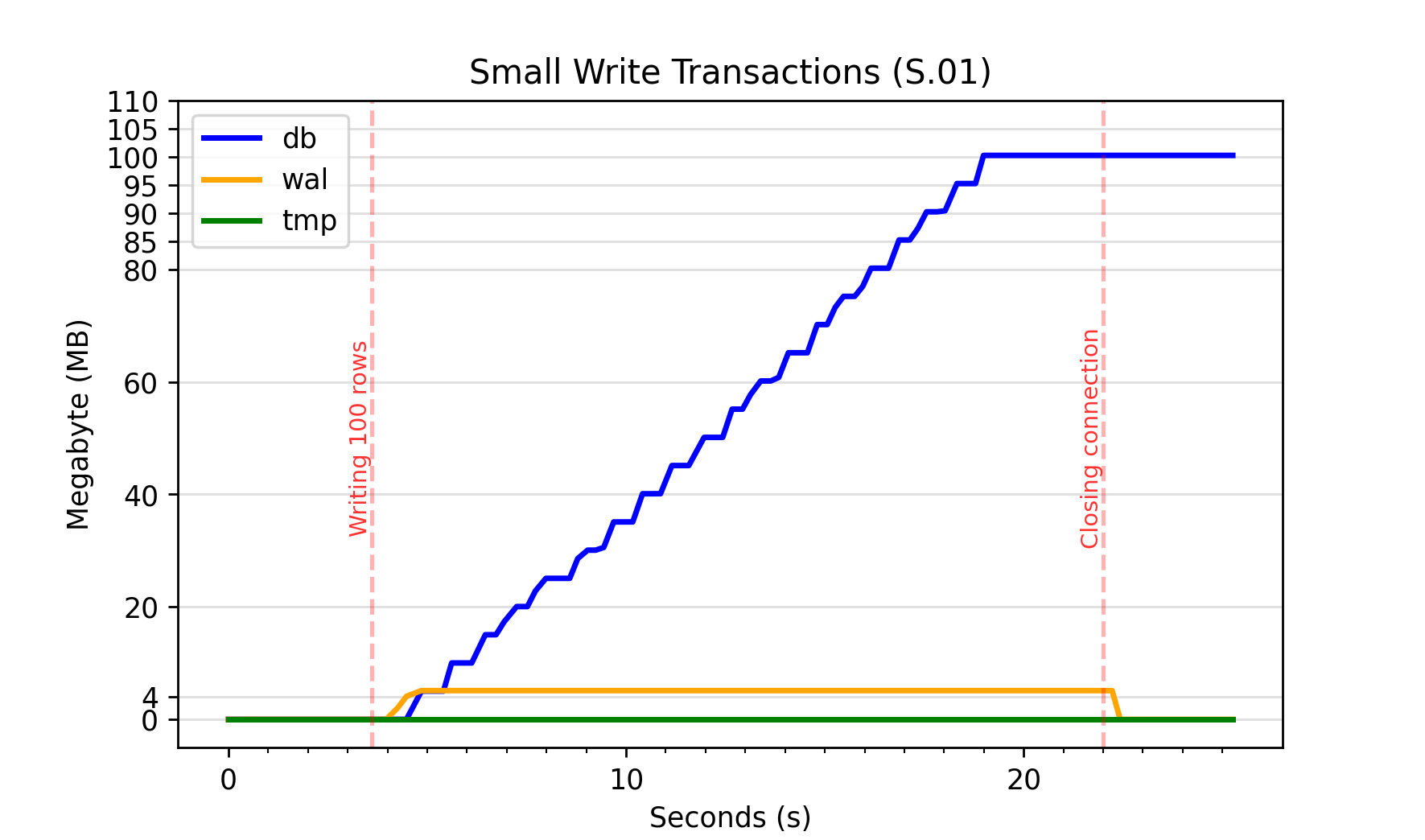

When the same data is written with a commit after each row (1 MB of data), automatic checkpointing is allowed to run without any restriction. S.01 shows the effect of this on file sizes, where the WAL file size can be seen to plateau at around 4 MB.

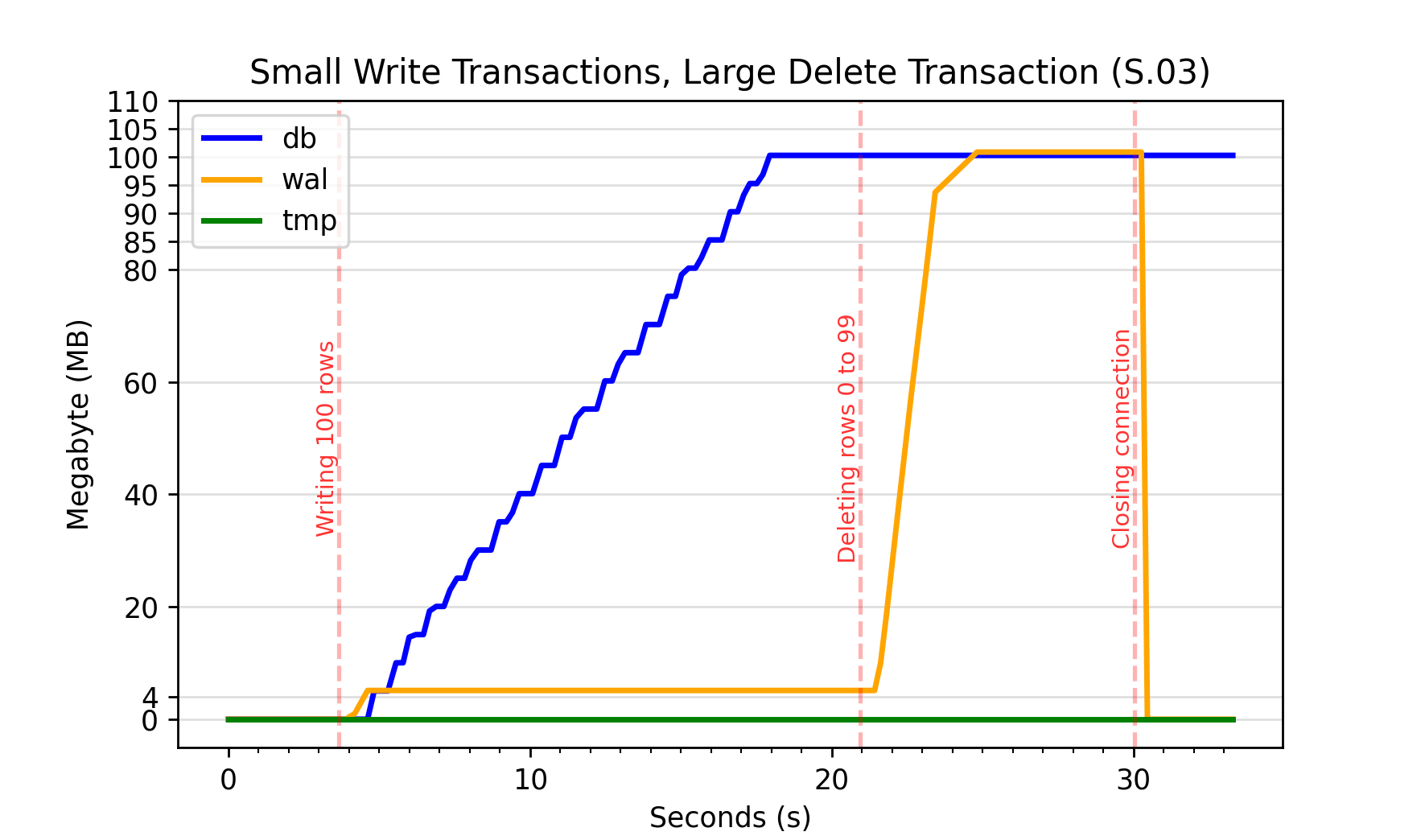

Just to note that it doesn’t matter if that transaction is a delete. A large delete transaction of all 100 rows in the database will also increase the WAL file size to 100 MB. This is shown in the graph depicting S.03. The database was originally populated using small, single row commits. The large delete transaction issued around the 21 s mark results in the WAL file growing from 4 MB to 100 MB.

Reducing WAL File Size

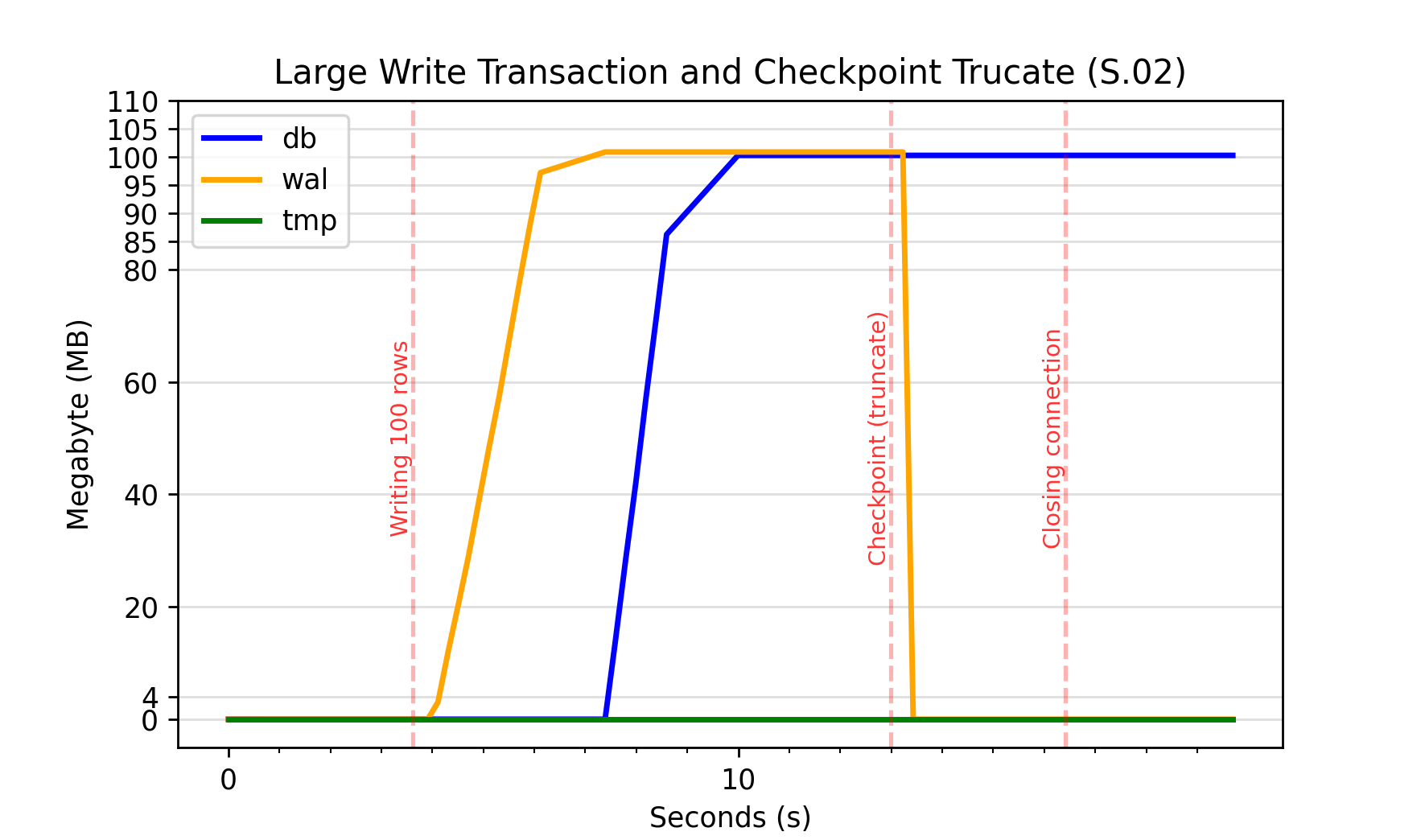

Its possible to use the pragma wal_checkpoint(TRUNCATE) to shrink the WAL file back to 0 MB. The results of this after writing 100 MB to the database in single transaction are shown in graph S.02. All the data appears to have been written the the database file by 10 s. When the checkpoint truncate is issued at around 13 seconds, the WAL file rapidly shrinks from 100 MB to 0 MB.

The vacuum Command

As seen in S.03 above, the database file size does not immediately shrink after a delete. If you desire to reduce the size of a database you need need to request some form of vacuum, of which there are a few “flavours”. This section will focus on the vacuum; command, which to quote the SQLite documentation:

The VACUUM command works by copying the contents of the database into a temporary database file and then overwriting the original with the contents of the temporary file.

Vacuuming an Empty Database

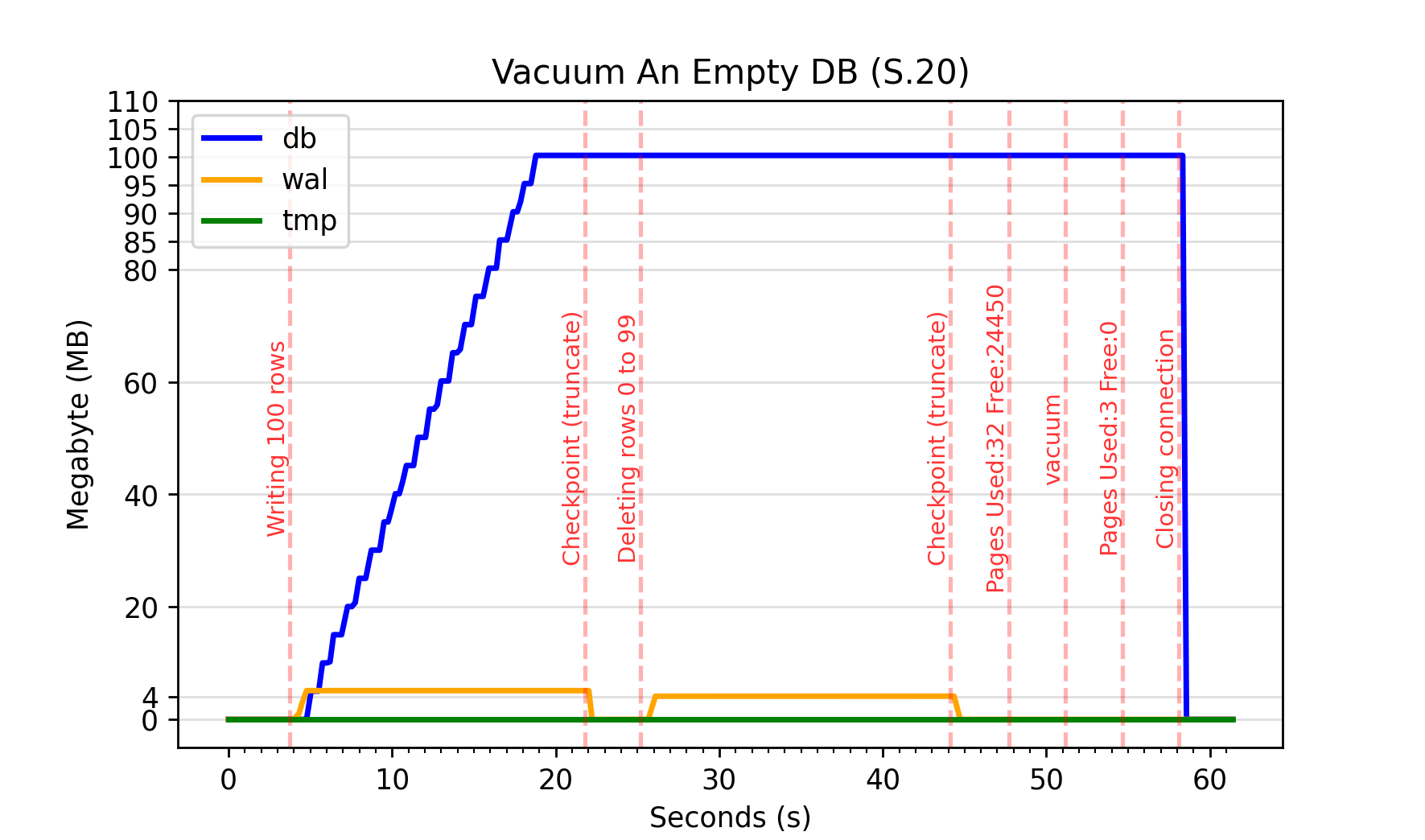

S.20 shows the effect running vacuum on an empty database:

You can see after the delete, the database reports there are 24450 free pages. After the vacuum is issued (51 s) the database reports there are only 3 pages used. However, the database is still clearly around 100 MB size.

This is explained in the SQLite documentation:

When overwriting the original, a rollback journal or write-ahead log WAL file is used just as it would be for any other database transaction.

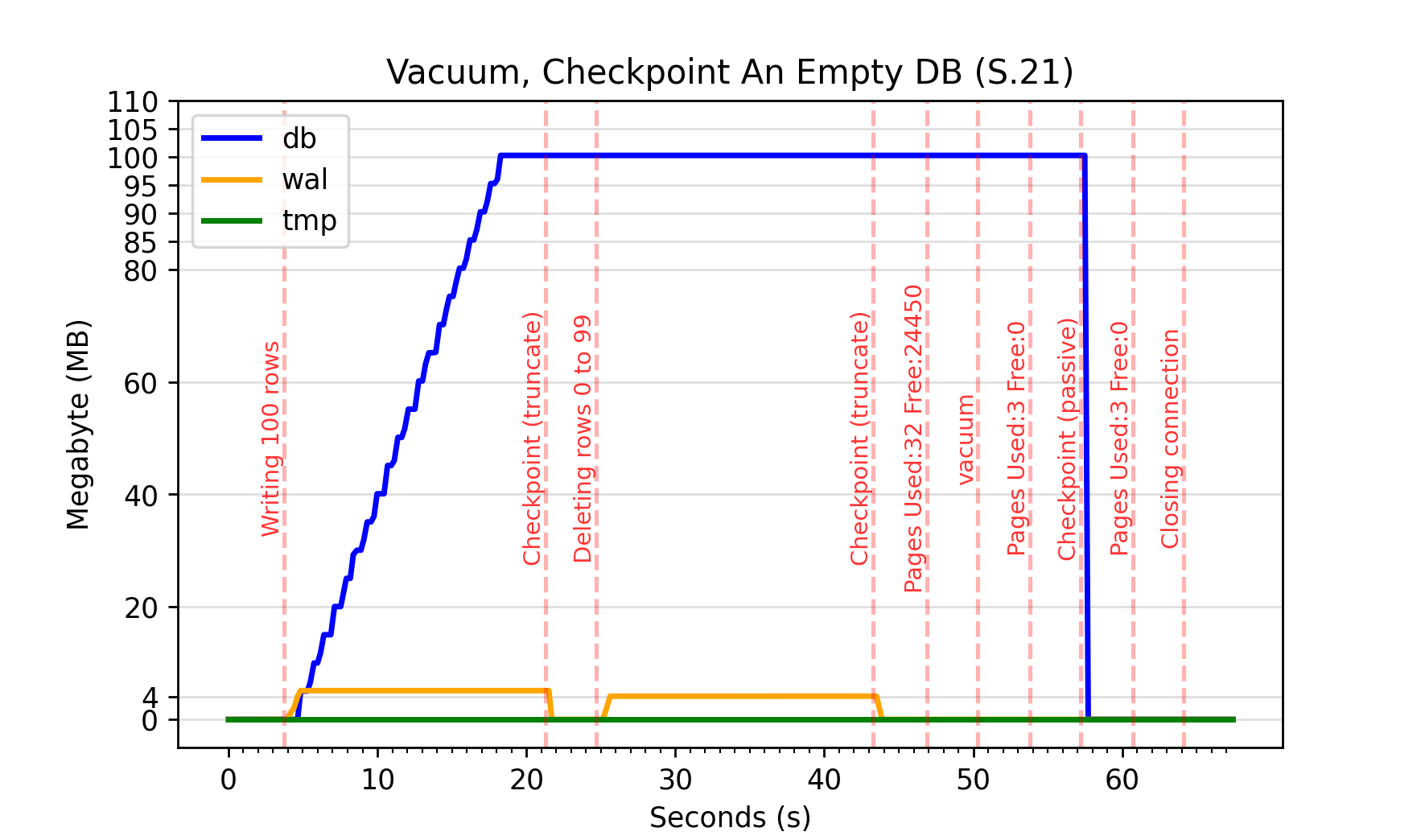

i.e. the database is only going to shrink if a checkpoint is run. Since very little data is actually moved into the WAL file for an empty database, we will need to request one if we want to see an immediate result. We’ve previously used wal_checkpoint() with the “more extreme” truncate argument to shrink the WAL file. Here we can get away with “flushing out” whats in the WAL file to the database. For that we can use the wal_checkpoint(passive) variant. The effect of this can be seen in S.21, where after issuing the checkpoint at around 57 seconds the database size drops to near 0 MB .

Vacuuming a Full Database

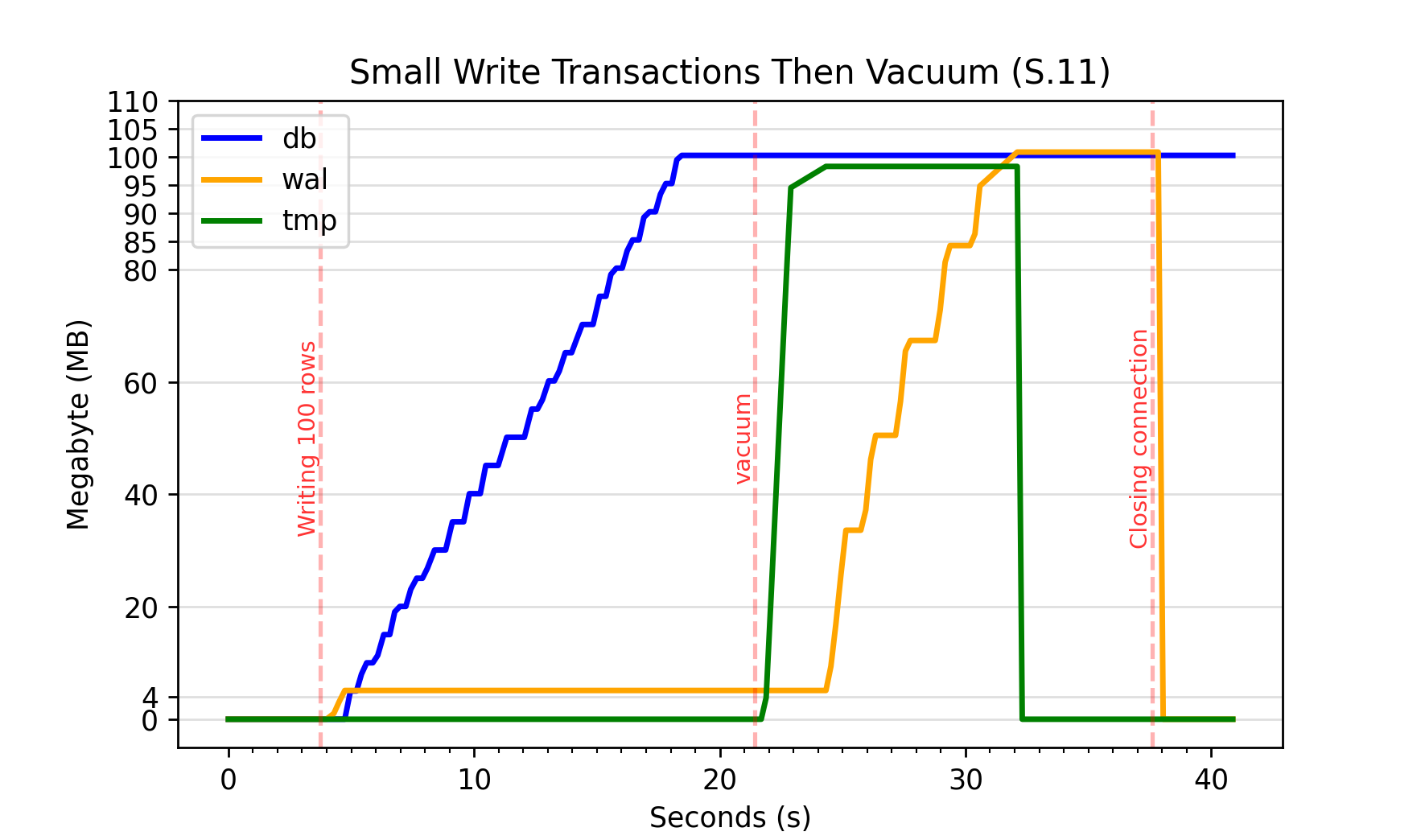

Scenario 11 shows the effect of vacuuming a database containing 100 rows / 100 MB of data. The DB size remains at 100 MB throughout, however the following changes occur to the other SQLite files:

- The temporary directory grows to almost 100 MB (around 24 s)

- The WAL file grows to 100 MB (around 32 s)

- The temporary directory usage shrinks to 0 MB (around 32s)

There is a brief time around the 32 second mark in S.11 where the DB file, WAL file and temporary directory are all approximately 100 MB. An increase in storage usage of 200 % may not be ideal on a device with tight memory constraints.

Credit to the SQLite documentation, it also warns about this scenario:

This means that when VACUUMing a database, as much as twice the size of the original database file is required in free disk space.

incremental_vacuum

There is a second way to explicitly request a DB be vacuumed, and that is via incremental_vacuum.

Unlike a full vacuum, the use of this command requires some forethought as the database needs to be configured to enable its use. The auto_vacuum mode ideally needs to be set to incremental before any tables have been created. Failing that, a full vacuum needs to be executed so SQLite has the opportunity to rebuild its internal data structures.

(Note that it is also possible to let SQLite automatically truncate the database every commit by setting auto_vacuum=full. This has not been profiled here).

Unlike a vacuum, an incremental_vacuum does not create a temporary copy of the database. It moves frees pages to the end of the database then truncates the database. This should offer a faster way of getting a space saving. However this is not without trade offs.

From pragma auto vacuum documentation:

Note, however, that auto-vacuum only truncates the freelist pages from the file. Auto-vacuum does not defragment the database nor repack individual database pages the way that the VACUUM command does

From vacuum documentation:

In some cases, VACUUM may also reduce the number of partially filled pages in the database, reducing the size of the database file further.

When invoked without arguments, or when provided with an argument less than one, incremental_vacuum, will attempt to remove all free pages. In the following text, this will be referred to as an “entire” incremental vacuum. A positive integer argument to incremental_vacuum specifies the number of pages to be removed from the freelist. The remainder of this post will refer to this as a “granular” incremental vacuum.

One gotcha with incremental_vacuum (which I ran into) is the user needs to heed the return from sqlite3_step() (or in the Python case just issue fetchall()). Each step of an incremental vacuum will only free one page at a time.

https://stackoverflow.com/a/56412002

Entire incremental_vacuum

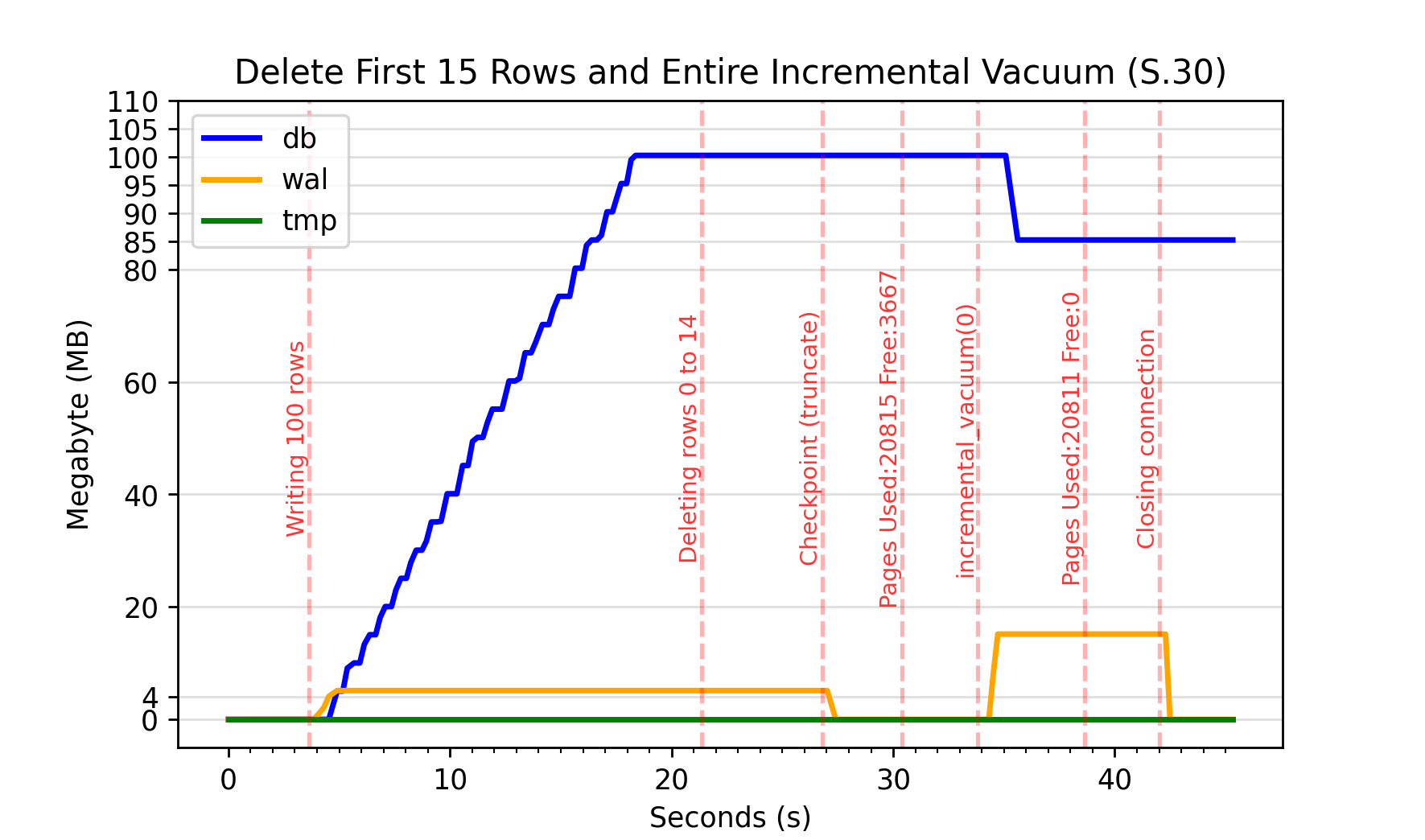

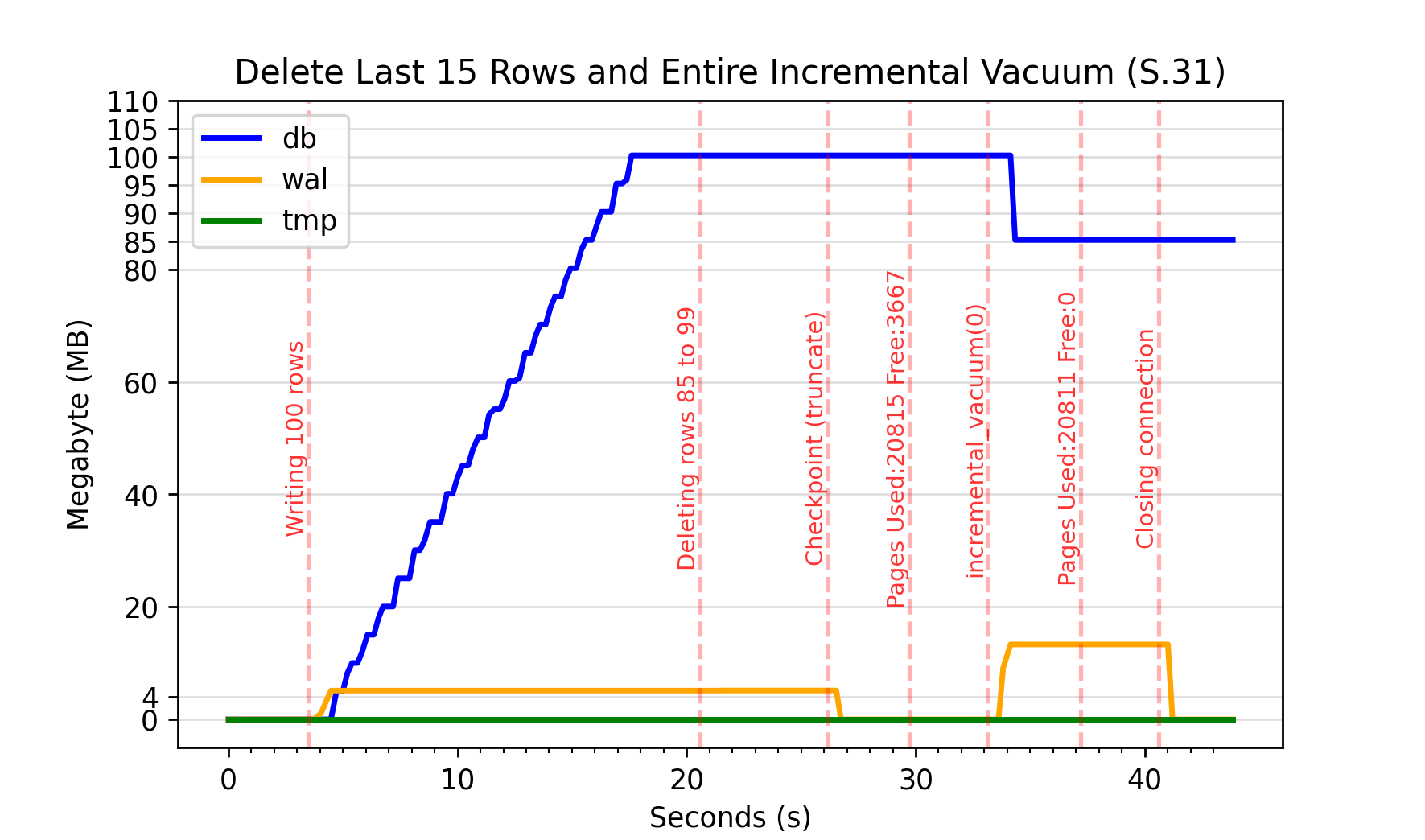

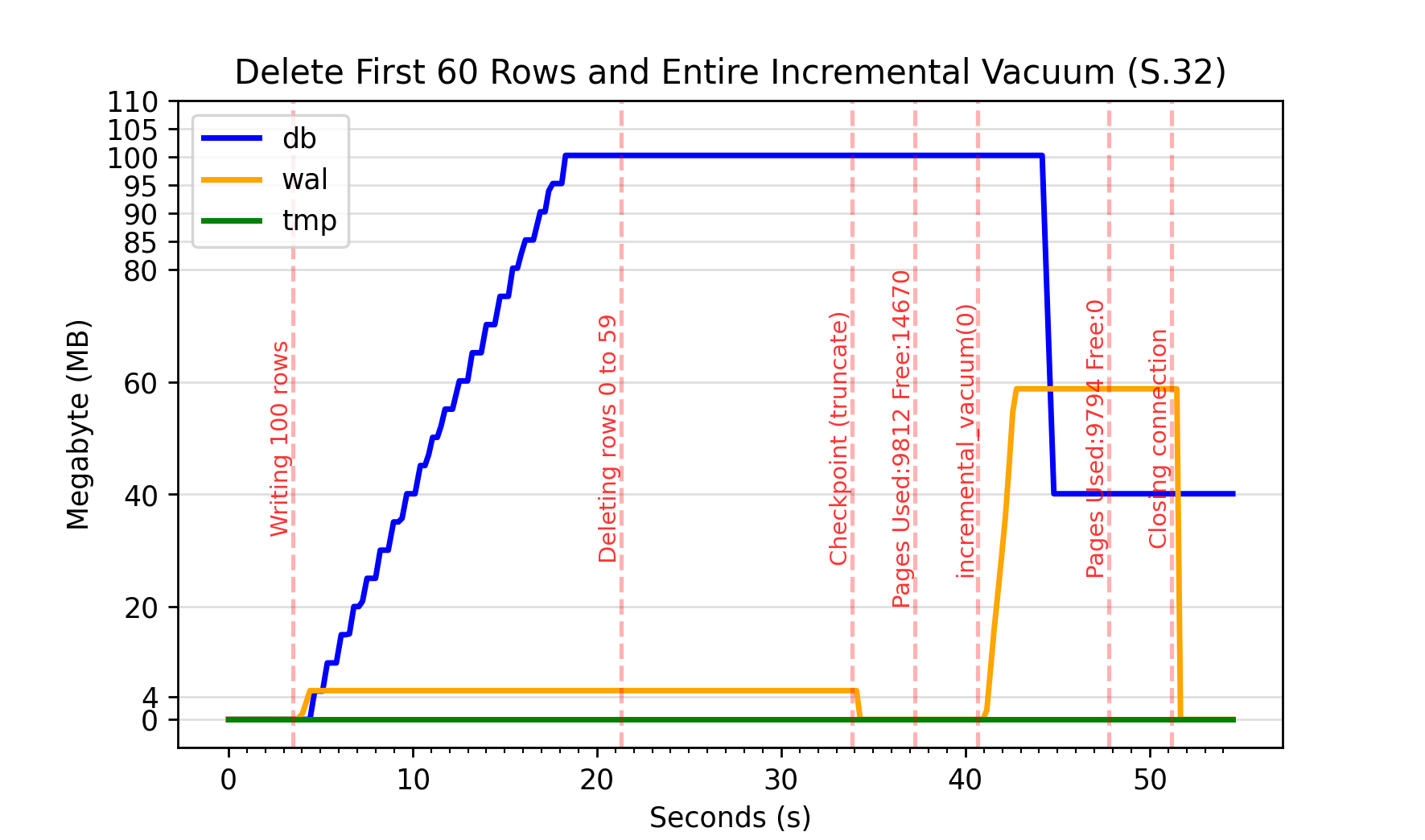

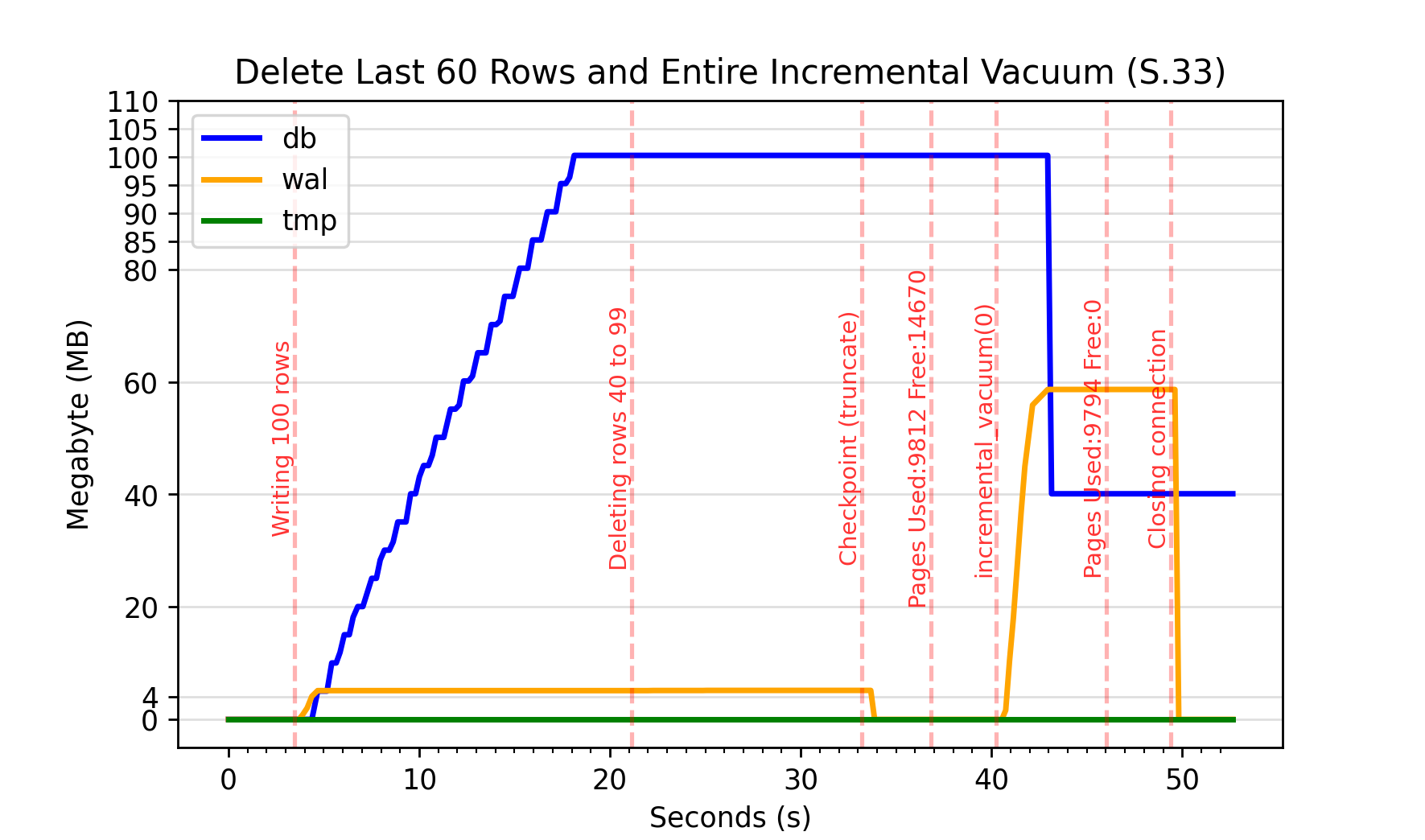

The graphs below show how the file sizes change when running an entire incremental vacuum on a database with a varying amount of free pages. All databases were originally populated with 100 MB of data before different numbers of rows were deleted. In some cases the rows deleted were the first inserted, which are assumed to be near the start of the database file. In others the last inserted rows were deleted, which should have been near the end of the file.

In each of the cases, you can see the database shrinks in size by approximately the expected amount, i.e. 15 rows deleted sees a 15 MB reduction in S.30 and S.31. What I found surprising is the increase in the WAL file size. In Scenarios 30-34, the WAL file grows to approximately the same size as the deleted data.

The graphs for S.32 and S.33 show near identical behaviour for an entire incremental vacuum ran after deleting the first and last 60 rows in the database respectively. It doesn’t matter that in S.33 that the vast majority of the pages at the end of the database are presumably already free pages. I would have expected there would be less reordering of pages required in S.33 in order to truncate the database, and a smaller WAL file size would be observed compared to S.32.

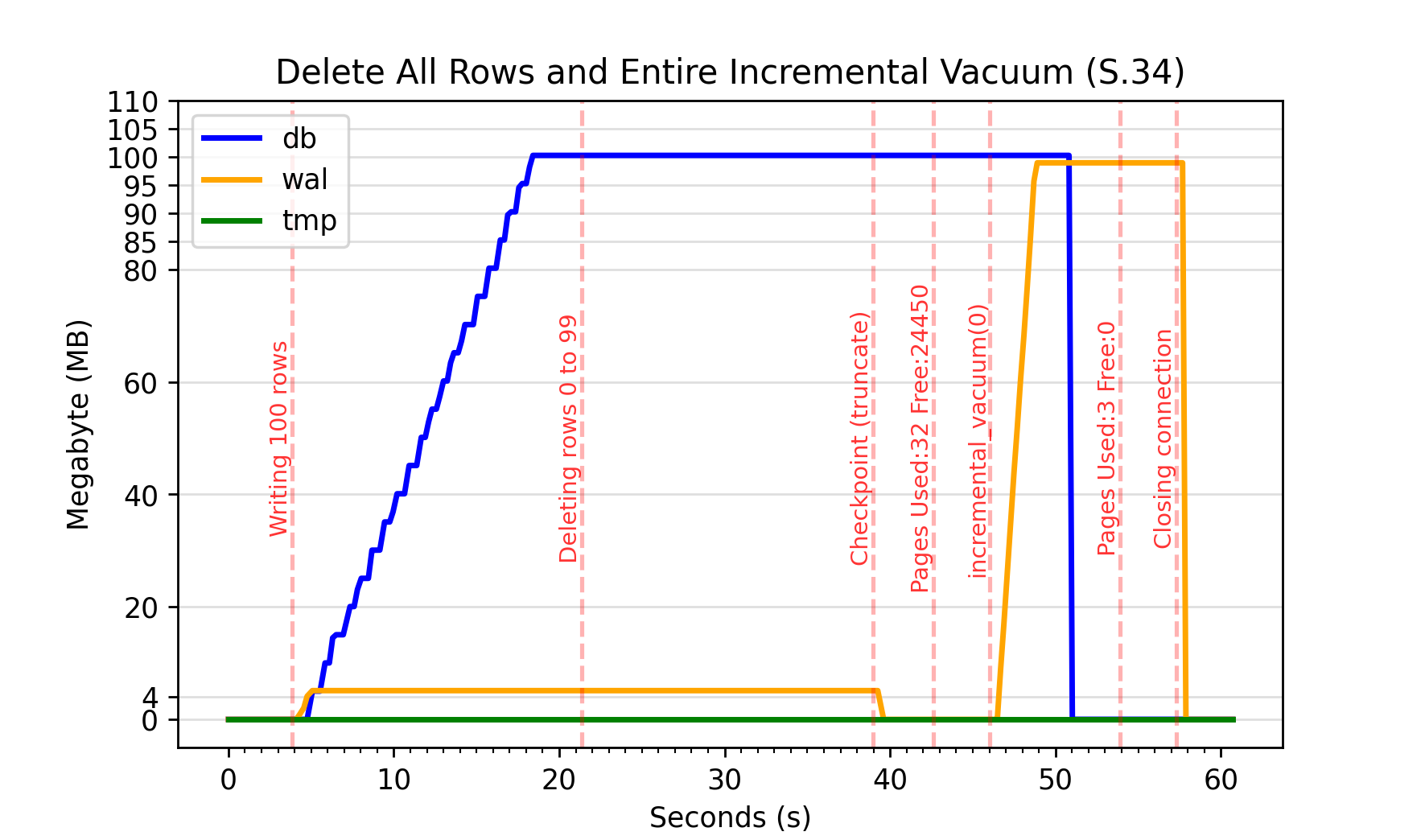

This behaviour is most evident in the graph of S.34 where all 100 rows have been deleted. The WAL file increases to near 100 MB at around 49 seconds before the DB starts being truncated due to an automatic checkpoint.

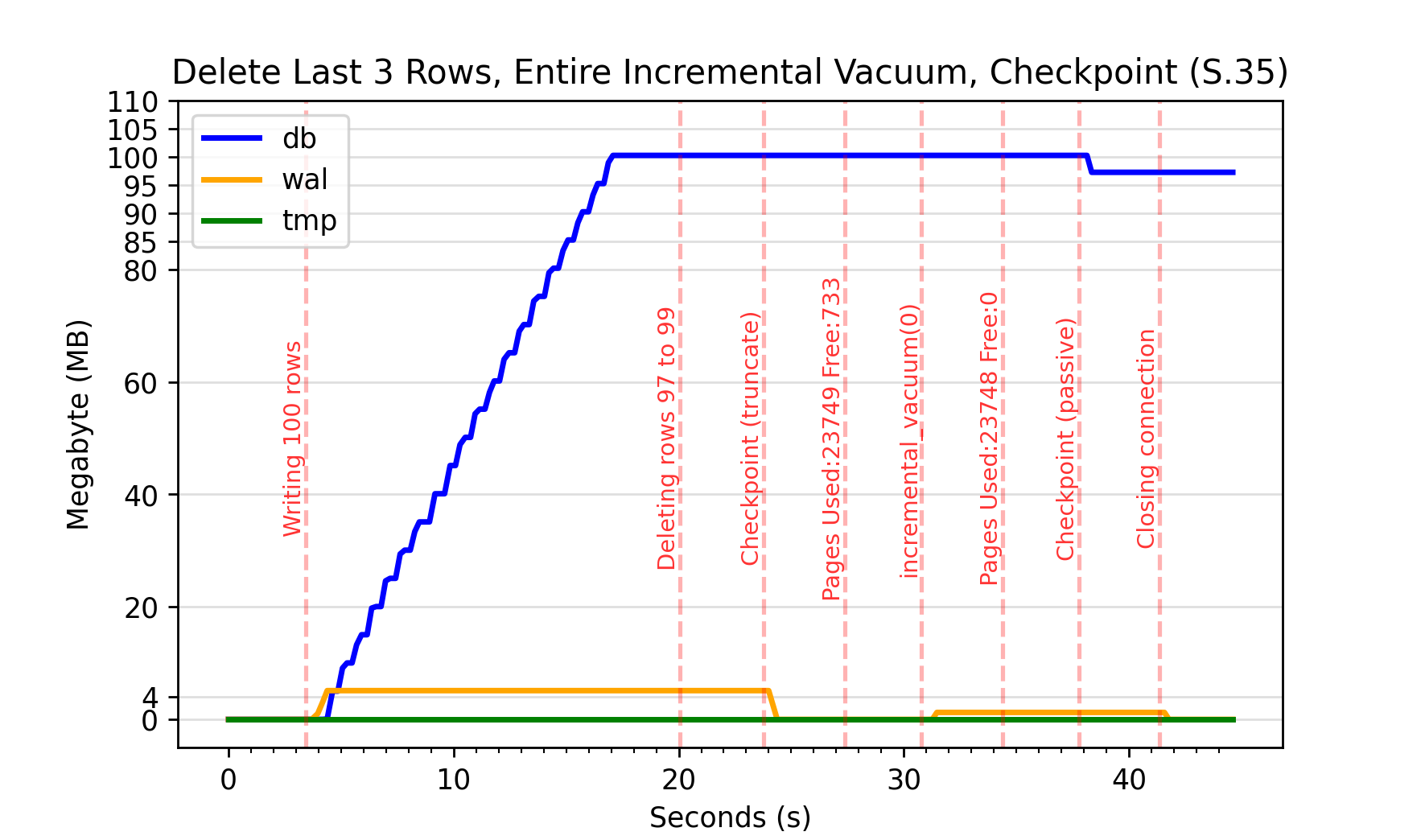

Scenario 35 appears to show an exception to this. S.35 was initially added to prove an entire incremental vacuum requires a checkpoint in order to truncate the database. This is evident by the database file size remaining stable after the entire incremental vacuum is issued at 31 s, but shrinking following an explicit checkpoint at around 38 s. However, this graph also also shows that while the entire incremental vacuum results in a truncation of the DB of just under 3 MB, the corresponding increase in WAL file size is only approximately 1.3 MB. This suggests SQLite isn’t consistently pushing all the free pages through the WAL file. See below for some additional observations on this.

Granular incremental_vacuum(N)

In the following scenarios, incremental vacuum has been provided with a positive integer argument to limit the number of free pages removed at a time.

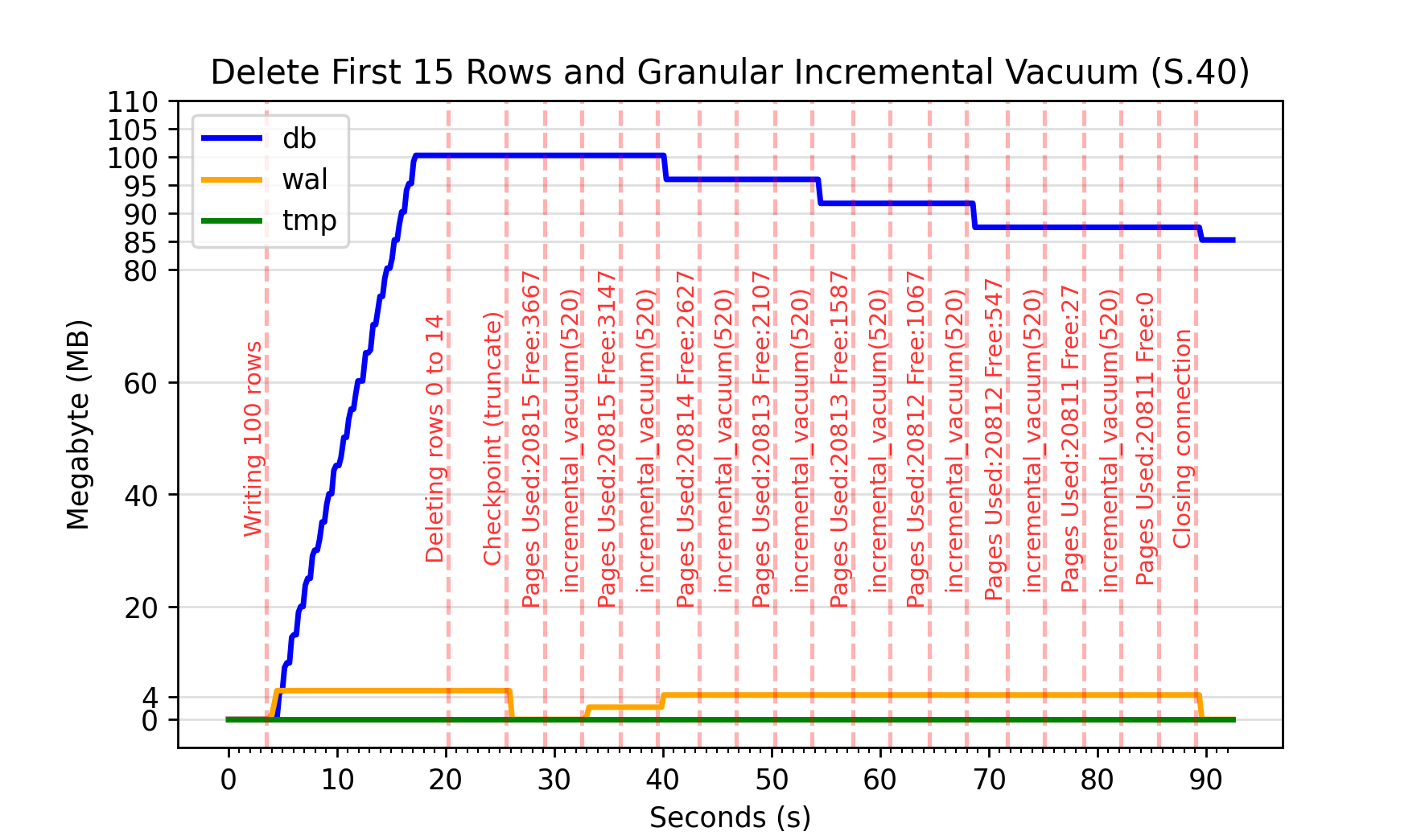

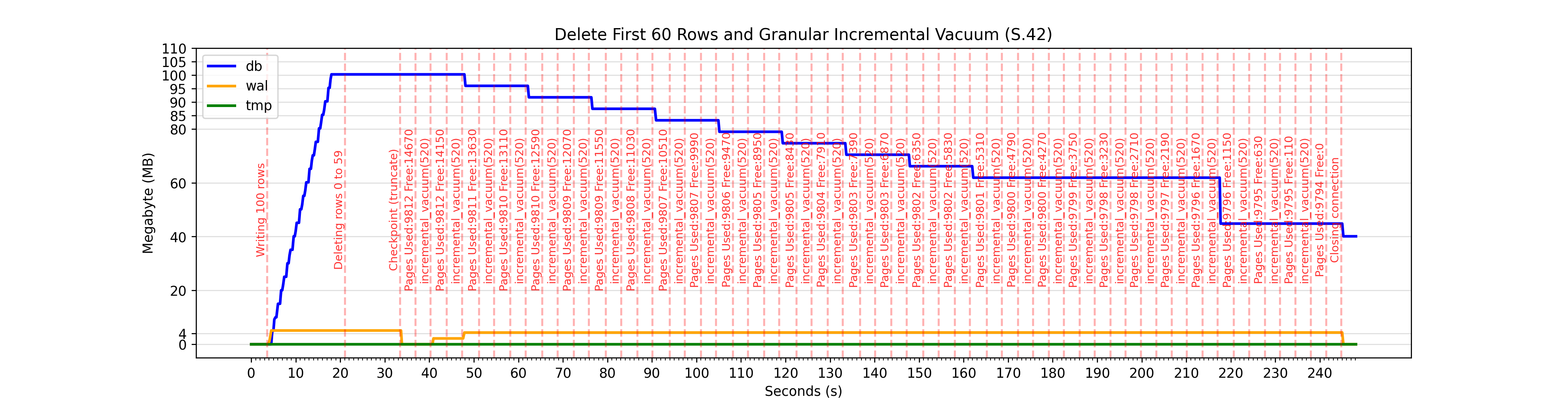

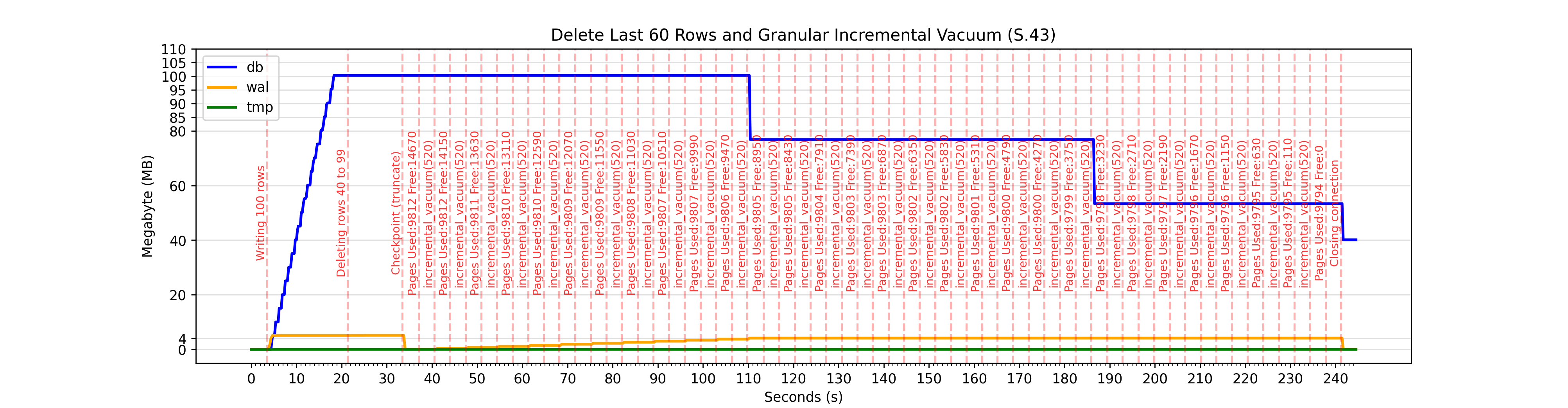

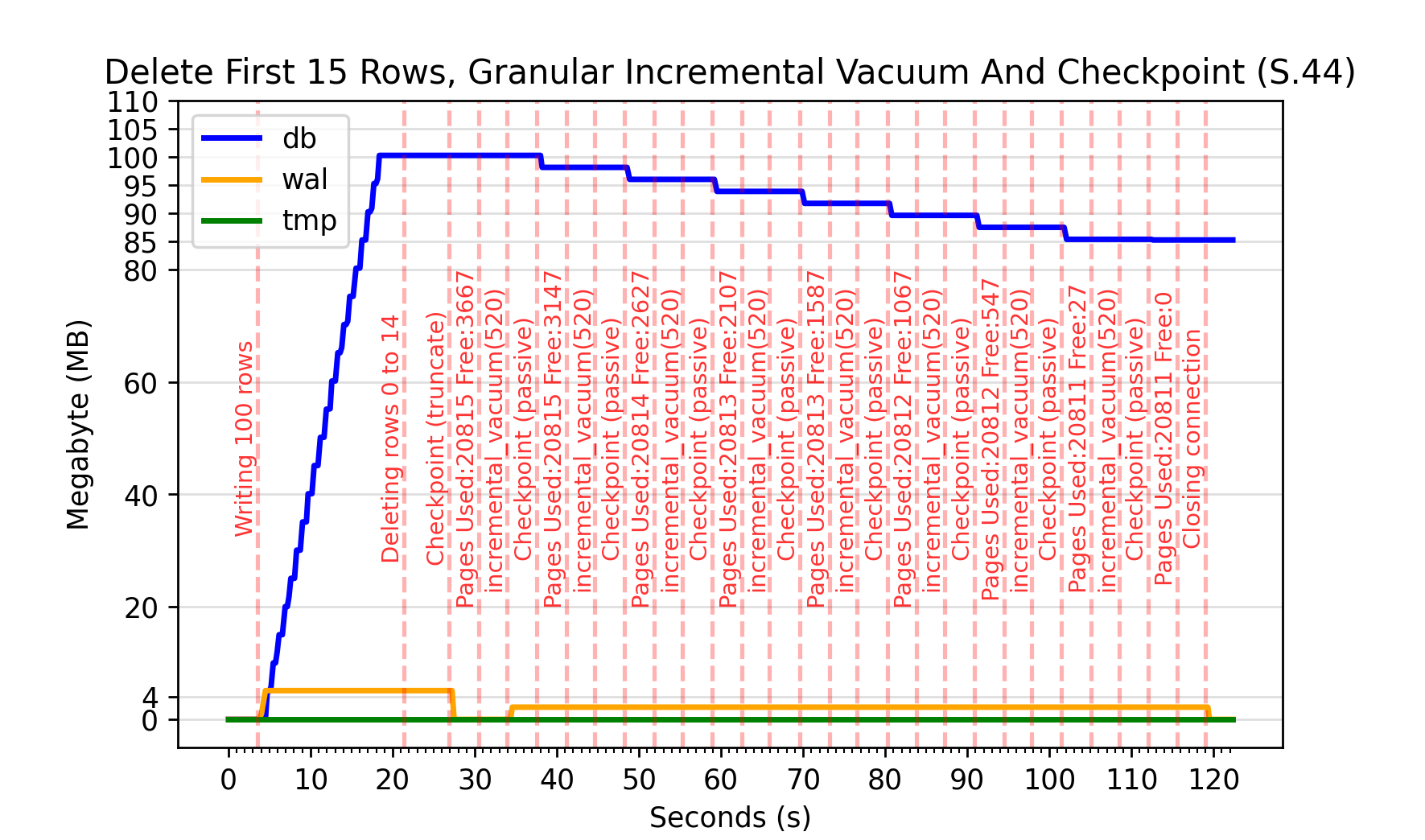

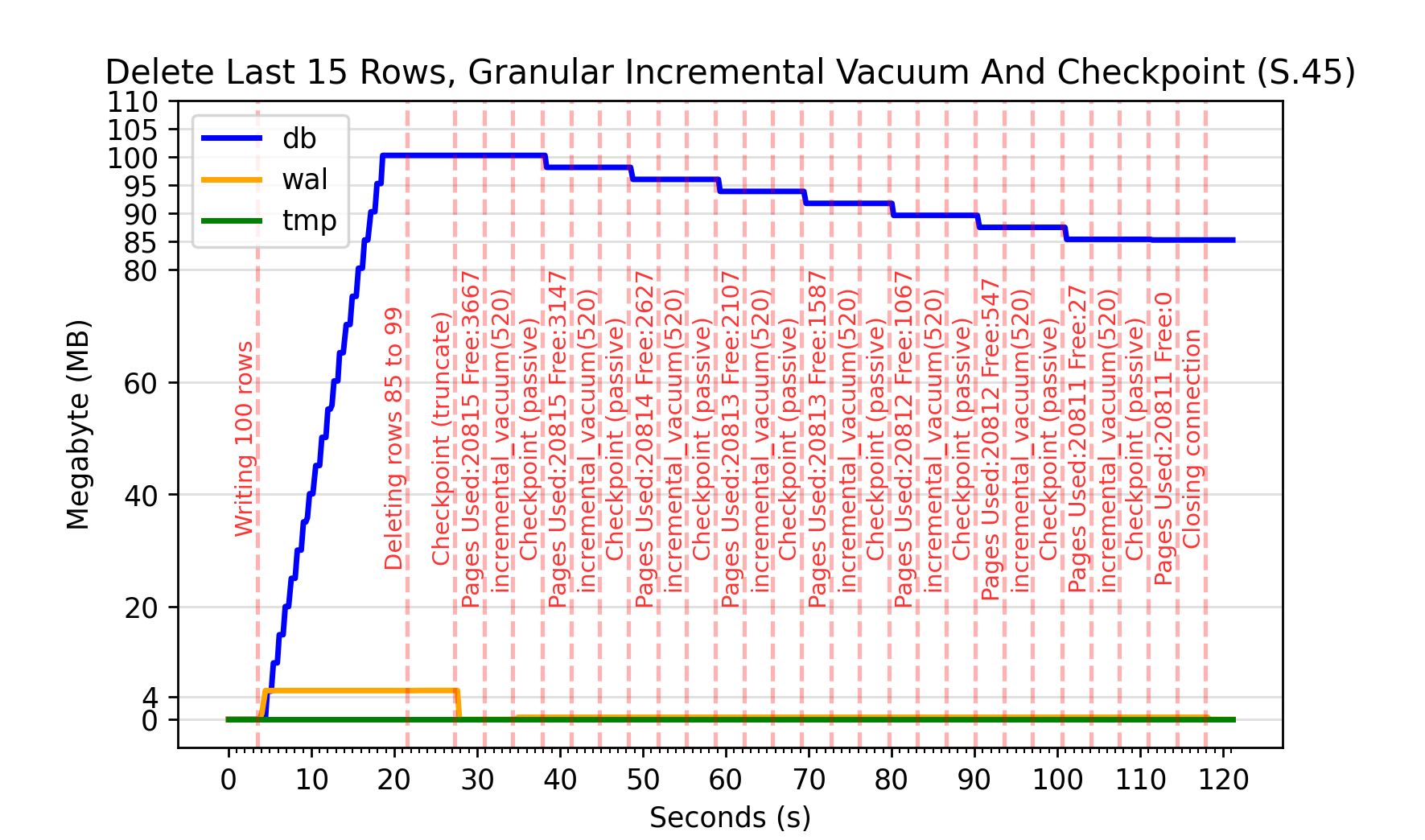

In Scenarios 40-45 the number of pages to be freed in a single invocation of incremental_vacuum has been set to 520, or approximately 2.1 MB. This is to try and balance keeping the WAL file size near 4 MB through automatic checkpointing, while keeping the graph uncluttered. This has worked with restricting the WAL file size at least; not growing significantly over 4 MB in any scenario.

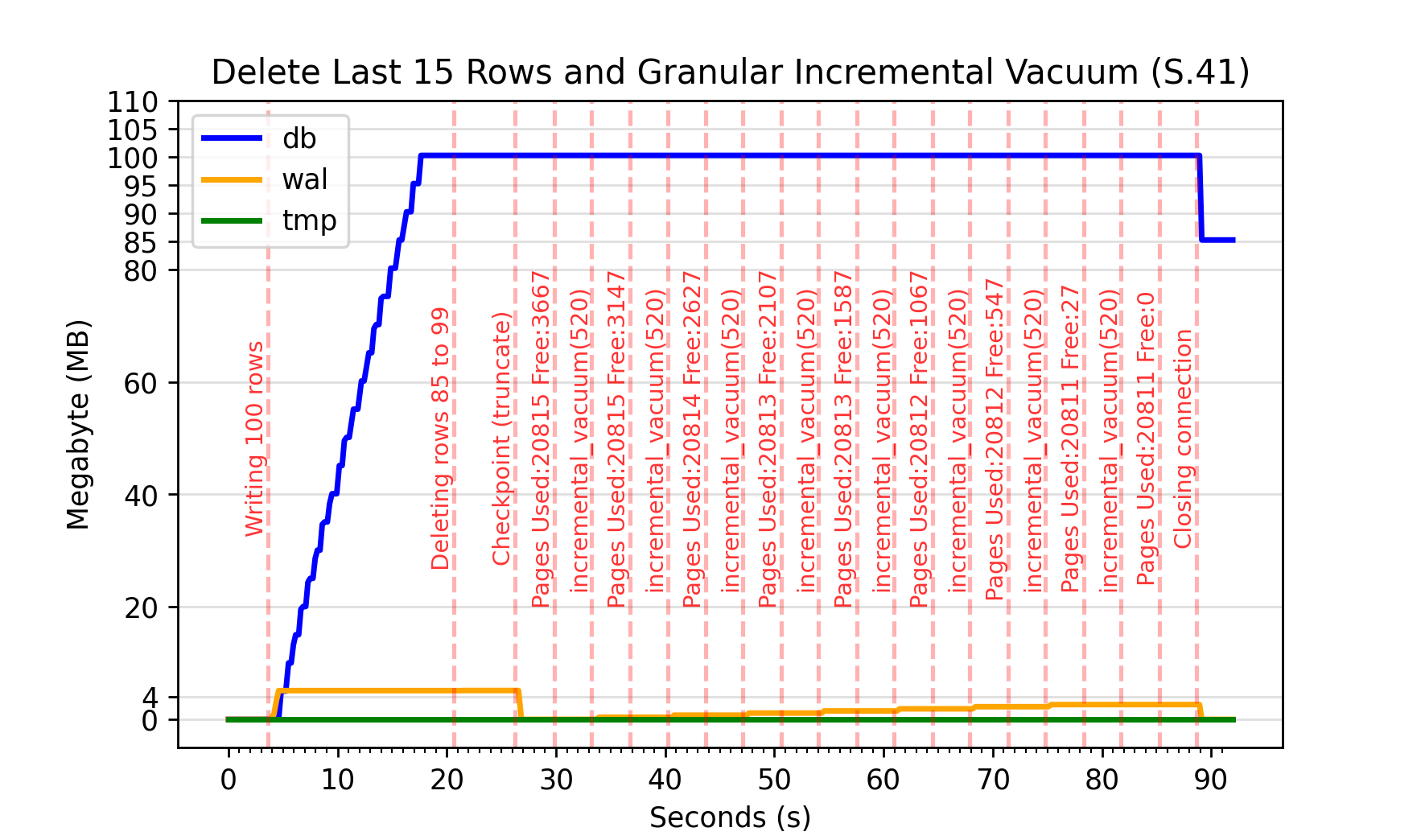

There is an interesting difference in the behaviour of a granular incremental vacuum after deleting rows which should be found near the start of the database file compared to those found near the end of the file. This can be observed by comparing S.40 with S.41, and S.42 with S.43.

Looking at Scenario 42 and 43 in more detail, it can be seen that after 60 rows were deleted from the database there were 14,670 free pages in each database.

In S.42, where the first 60 rows of the database have been deleted, the DB has been truncated in steps of approximately 4 MB at a time. This first occurs at around 48 s, and can be observed happening every two calls to incremental_vacuum(520). This would suggest that after two incremental vacuums requesting 2.1 MB of pages be freed, just over 4 MB of data has been inserted into the WAL file, triggering an automatic checkpoint.

In the graph of S.43, where the last 60 rows have been deleted, the DB is being truncated in steps of approximately 22 MB. The first occurrence of which is at 111 s. This truncation is happening after 11 calls to incremental_vacuum(520). Here it can be inferred that the automatic checkpoint threshold of 1000 pages (4 MB) is passed sometime after 5200 (520 * (11-1)) pages (which is 21.3 MB) have been incrementally vacuumed.

I would speculate this difference in behaviour is due to most of the free pages already being at the end of the database in S.43. This allows SQLite to dispose of them almost immediately when truncating the database file. In order to truncate the database in S.42, the used pages at the end of the file need to be moved to replace the newly freed pages which should exist at the start of the file.

Inconsistency in Incremental Vacuums

There appears to be an inconsistency in a granular incremental vacuum vs an entire incremental vacuum, for which I don’t currently have an explanation. EDIT: I reached out on the SQLite forums and got the following answer for anyone interested.

Consider the graphs of S.31 and S.41. In each, the last 15 rows of the database have been deleted. There should be 15 MB (or close to it) of free pages already at the end of the database. In S.31 the WAL file grows by 15 MB during the entire incremental vacuum. The size of the DB shrinks by 15 MB after this operation, which suggests that the automatic checkpoint was triggered.

In S.41, where granular incremental vacuums are repeatedly eating away at the free page count, the WAL file can be seen slowly increasing in size until the 86 s mark, where it is approximately 2.6 MB. At this point, all 15 MB have been “freed”. When the connection closes the database file is truncated, as the WAL file has never grown enough to cause to cause an automatic checkpoint.

I think its a safe bet to assume an incremental_vacuum(0) is executing the same SQLite code as incremental_vacuum(N), so I hypothesised this behaviour must be dependant on the number of pages requested to be freed.

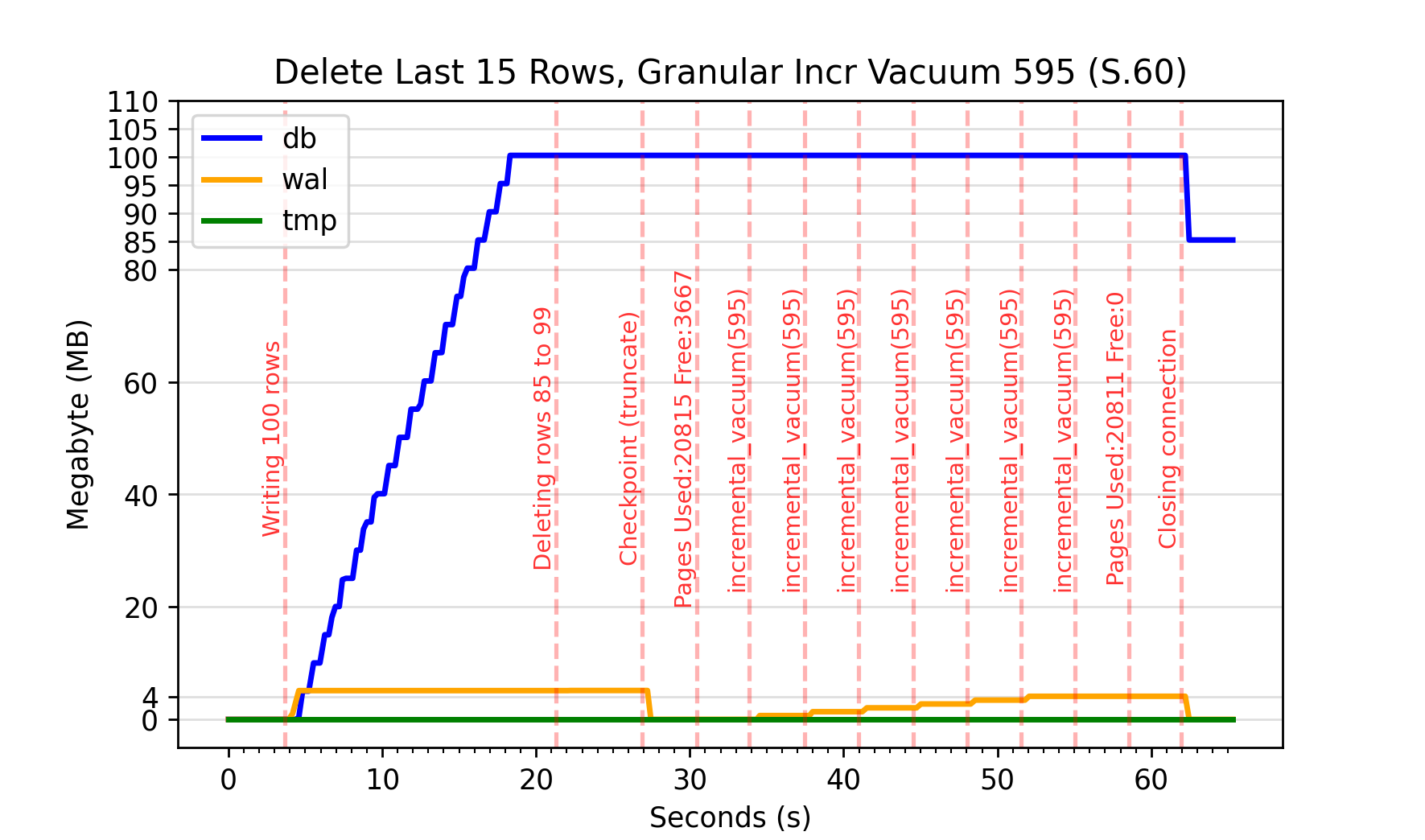

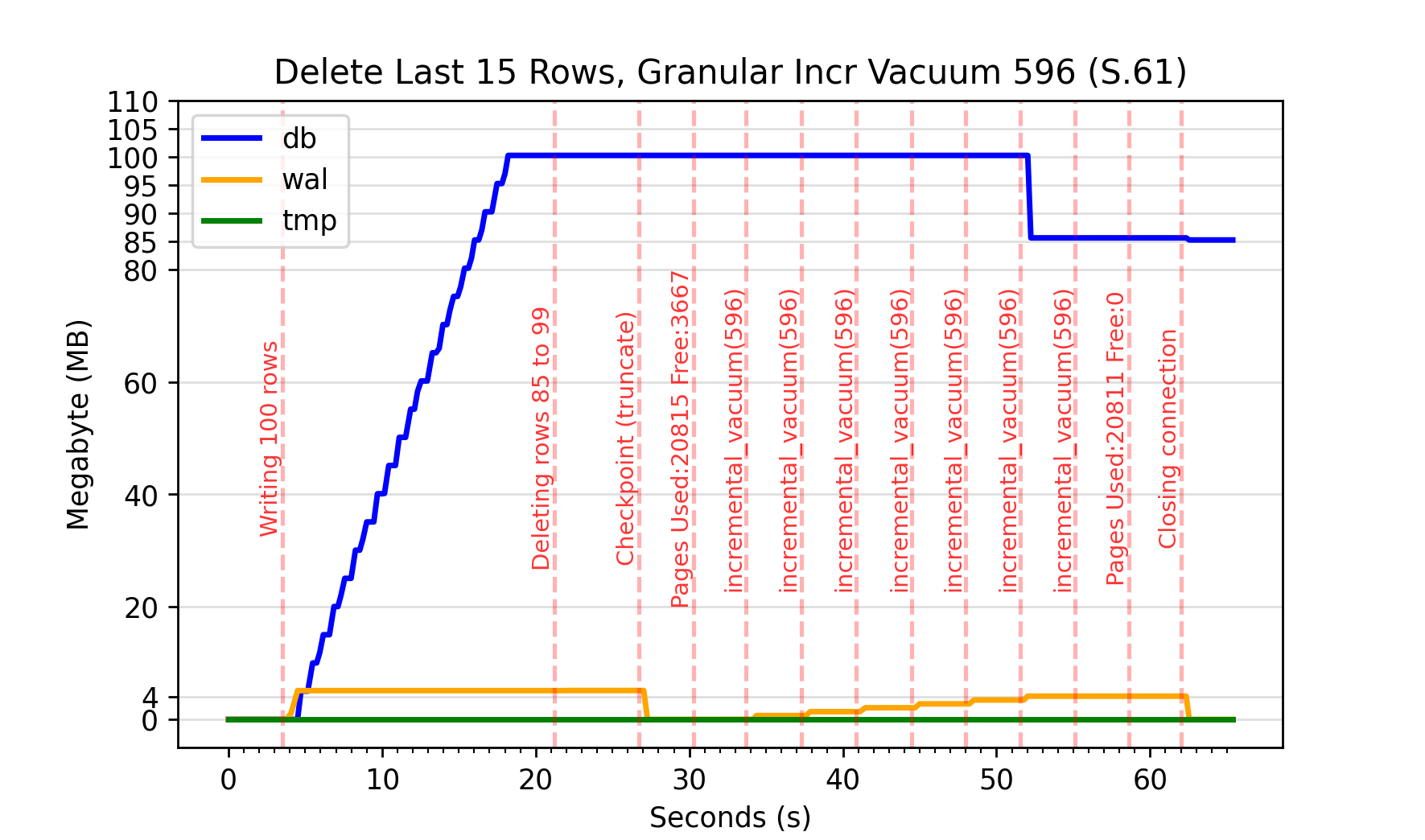

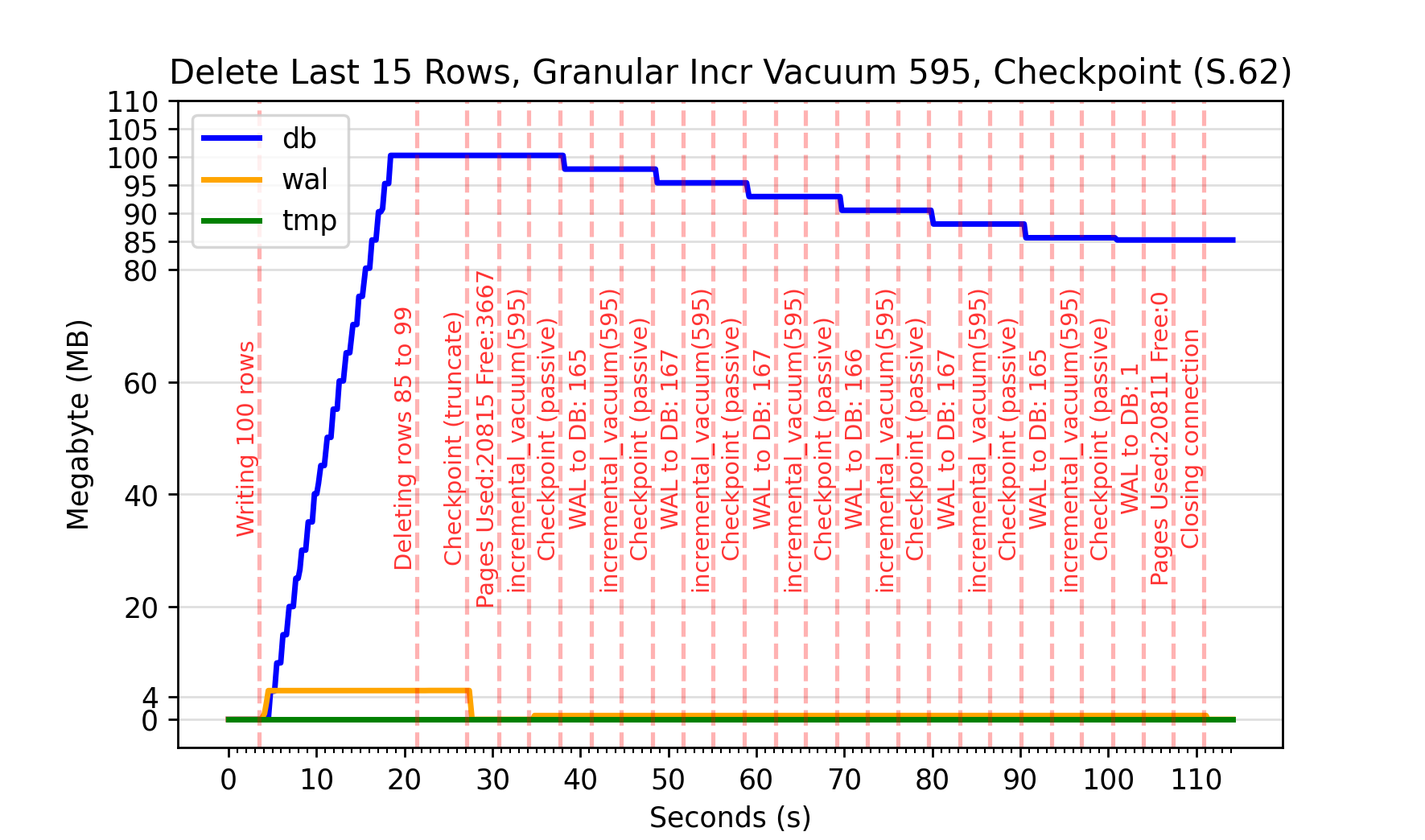

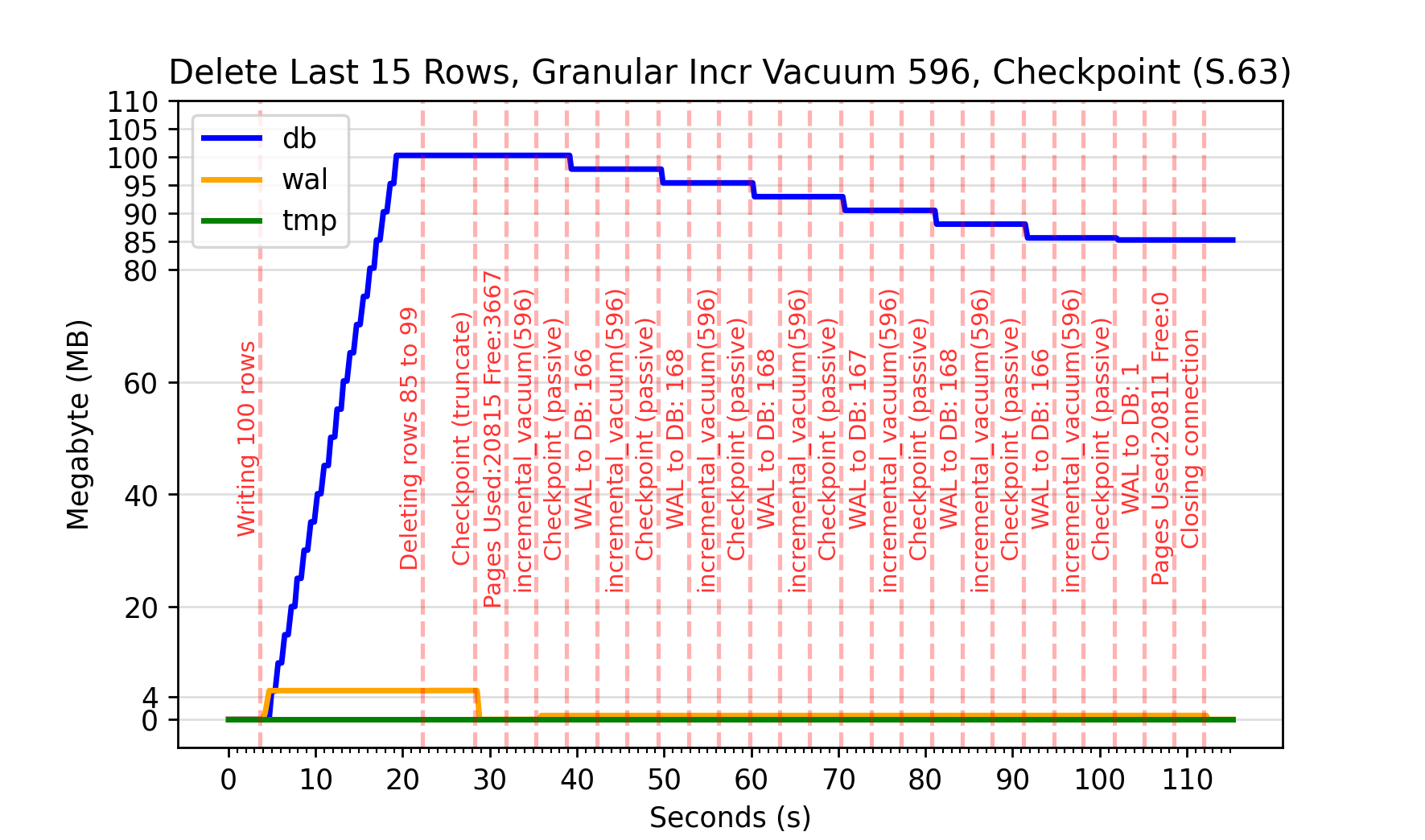

Focusing on the scenario where the last 15 rows have been deleted from the database, I have produced scenarios 60 - 65 which try to probe the impact providing different arguments to incremental_vacuum(N) has on the WAL file.

By throwing numbers against the wall to see what stuck, it was determined that repeatedly requesting an incremental_vacuum(595) until all pages had been freed would not cause an automatic checkpoint, but repeatedly requesting incremental_vacuum(596) would. This is observable in S.60 and S.61 respectively. While ultimately the same number of pages were being removed from the freelist (3667) it appears more data was being moved into the WAL file when the incremental_vacuum request was larger.

SQLite will provide the number of pages written to the WAL file, and from the WAL file to the DB after an explicit call to wal_checkpoint(passive). S.60 and S.61 were repeated with a checkpoint invoked between each call to incremental_vacuum(N). This is shown in S.62 and S.63 respectively. In both cases, the number of pages written to the WAL was the same as the number moved from the WAL to the DB, so only “WAL to DB” is logged on the graphs.

I was concerned the addition of a checkpoint would change the behaviour of the test. For a start, the WAL file will never grow to 1000 pages and cause an automatic checkpoint. However, when the the counts of the pages moved from the WAL are tallied (below) this doesn’t appear to have impacted the pages added to the WAL file during the tests.

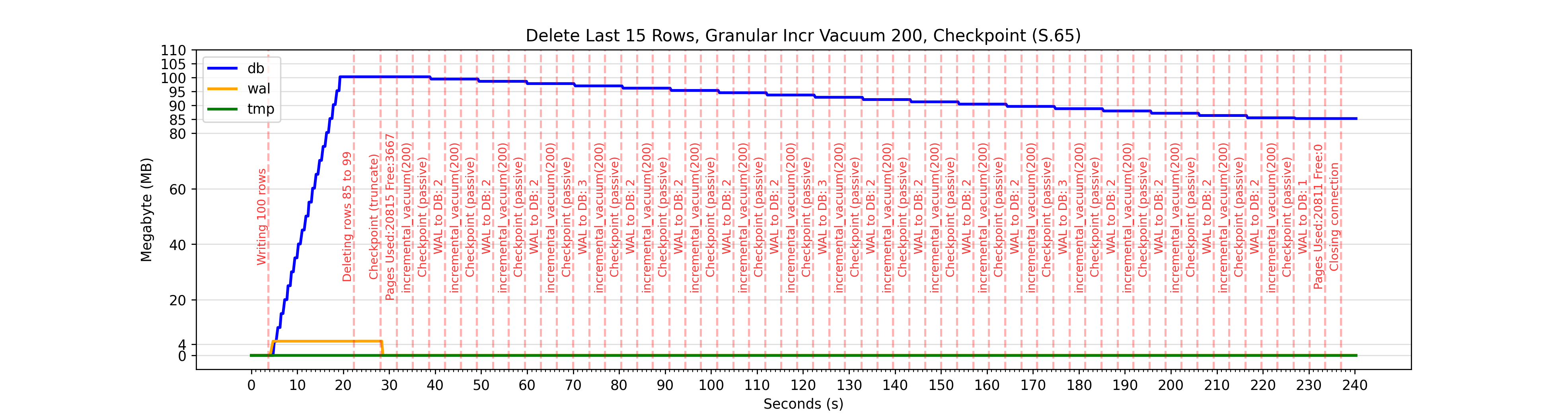

I have also included the graph of S.65, which logs the additional page information for a smaller granular incremental vacuum size of 200 pages.

When freeing 200 pages at a time in S.65, a total of 40 (3*2 + 3 + 4*2 + 3 + 4*2 + 3 + 4*2 + 1) pages are moved into the WAL file.

When freeing 595 pages at a time in S.62, a total of 998 (165 + 2*167 + 166 + 167 + 165 + 1) pages are moved into the WAL file.

When freeing 596 pages at a time in S.63, a total of 1004 (166 + 2*168 + 167 + 168 + 166 + 1) pages are moved into the WAL file.

It appears that when attempting to remove a fixed number of pages from the freelist with incremental_vacuum(N), choosing a smaller value of N will result in fewer pages being written to the WAL file during the process. As the value of N is increased, the number of pages moved into the WAL file appears to grow close to the number of free pages. This was originally seen above when executing an entire incremental vacuum (N=0) in S.31. Of course, reducing the number of pages removed in a single invocation of incremental_vacuum(N) will result in more calls to incremental_vacuum(N) being required to to completely clear the freelist.

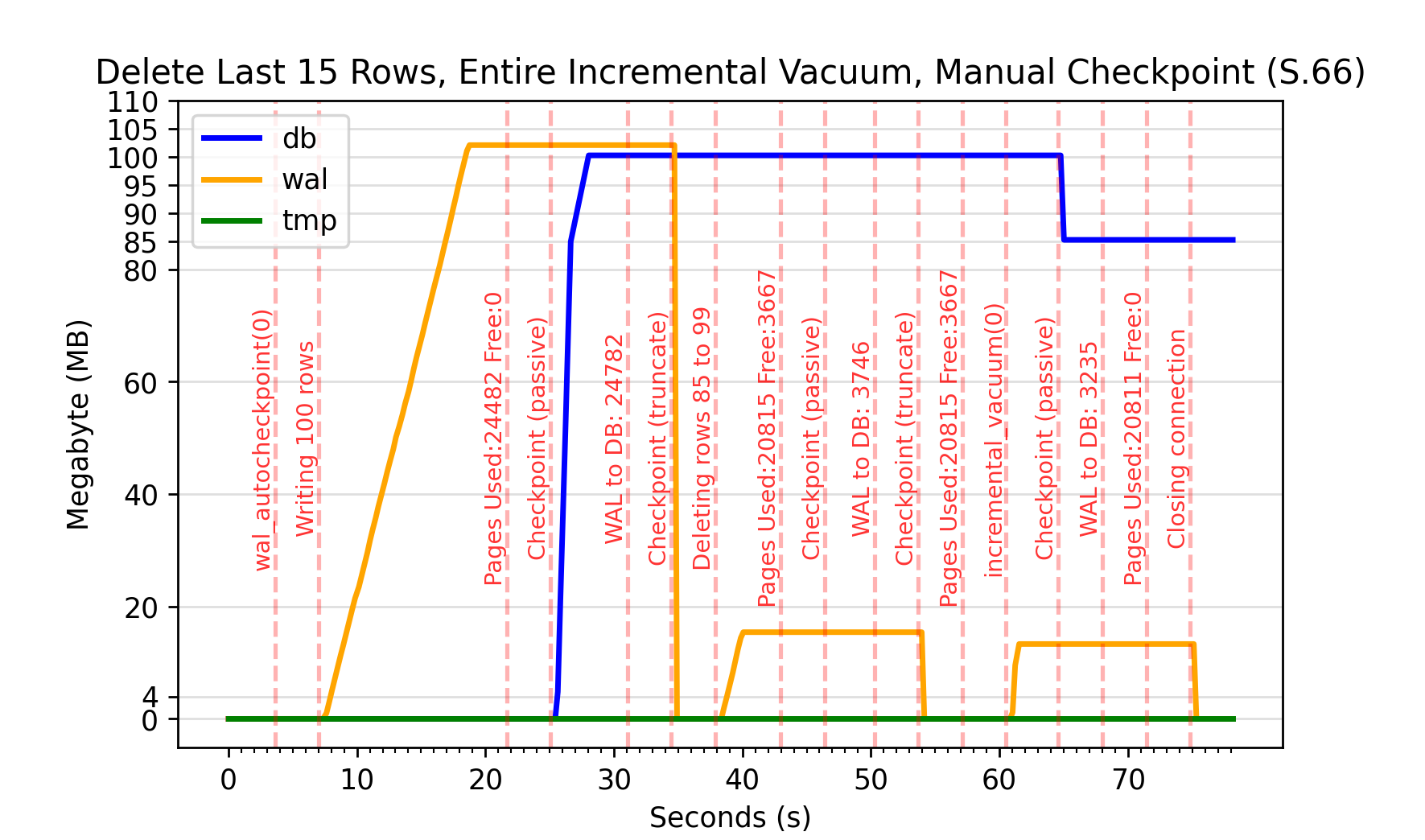

To obtain values for the number of pages moved from the WAL to DB for an entire incremental vacuum I disabled automatic

checkpoint using wal_autocheckpoint(0), deleted the last 15 rows in the database, and called incremental_vacuum(0). In S.66 you can see there were 3667 pages in the freelist at 43 s after deleting the last 15 rows. After the entire incremental vacuum at 61 s and subsequent checkpoint, 3235 pages were reported as moved from the WAL file to the DB.

This is significantly more than the 40 cumulative pages observed when executing multiple granular incremental vacuums of 200 pages in S.65.